The Next Domino Falls: AI Agents, Financial Automation, and the End of June 2025 Erosion of Human Control

It's been another busy day today in the world of AI "advances" . . .

Are you new to my R&D and analysis on AI, economics, politics, constitutional restoration, medicine, and the future of work?

If you'd like to follow along with my ongoing work and actual execution on these enterprises, click the “subscribe now” button just below. It's all free!

A month ago, AI agents were still an abstract concept in most professional circles: something on the horizon, coming to us all quite soon. Now they’re logging in to corporate systems, handling live workflows, and, increasingly, replacing the very roles once filled by human staff. This evening, the speed of adoption feels both normal and numbing.

This morning’s Wall Street Journal story on BNY Mellon confirmed what many of us suspected was coming but assumed was still a few months, maybe two quarters, away: banks are now giving digital workers not just trial tasks or shadow roles, but real credentials and responsibilities. At BNY, AI agents are actively monitoring payments and fixing code, with plans to expand their access to email and collaboration tools like Microsoft Teams. These aren't assistants. They're staff, just not the kind that eat, sleep, file HR complaints, or expect healthcare and retirement benefits.

Tomorrow morning (July 1, 2025), Intuit will roll out what it calls "Agentic AI" within QuickBooks Online. These embedded agents are designed to proactively execute workflows like invoice dispatch, payment reconciliation, customer follow-up, and even basic marketing tasks. Not suggest. Not assist. Execute.

That date matters because it signals something bigger: we’ve crossed into live deployments across sectors that touch nearly every American business—from enterprise banking to small business finance. The dominoes are falling fast.

And the next one looks like it will be marketing itself.

A Futurism article from Saturday details how Google’s ad infrastructure is being retooled for a world where AI agents are both the buyers and the targets (agent-to-agent, or A2A). In other words, the entire marketing stack is shifting from human-driven persuasion to algorithmic transactions: bots selling to bots, optimizing for metrics that humans may no longer even see. The goal, apparently, is efficiency. The result is a closed loop, where the buyer isn’t a person, and the message isn’t really meant to be read. It’s meant to trigger a behavior in another system.

When that becomes the norm, marketing becomes less about communication and more about control—less about persuasion, more about influence by design.

None of these developments happened in isolation. They are all expressions of the same shift: the diminishing need for human presence, judgment, and ultimately, permission. Once these systems are in place and functioning, they don’t just do the work. They make the decisions. They set the defaults. And increasingly, they define the constraints of your involvement.

You don’t tell QuickBooks to file an invoice. It just does. You don’t approve the payment timing—that’s been pre-optimized. You don’t decide what your ad budget should be—your marketing AI allocates it based on system-to-system feedback.

And soon, you may not decide how much tax to withhold. As these AI systems get deeper integrations with payroll and accounting tools, and as governments move toward real-time taxation infrastructure (driven by big government’s desire to trace every penny as it moves), there's a plausible near future in which taxes are automatically calculated, withheld, and paid without any input from the taxpayer. No chance to adjust for personal context. No conversation with a preparer. Just a number, optimized for the system, not for you.

The logic of these tools always trends toward efficiency. But efficiency isn’t the same as autonomy. And the cost of handing over these tasks is that we also hand over the discretion, the understanding, and the rights that came with them.

This past weekend, I dealt with what OpenAI tried to pass off as a human customer service rep about a technical support issue and my other operational problems with ChatGPT (as documented in my quite popular “Battle of the Bots” article from last night). It wasn’t human. It was a bot trained, not very well, to sound human: seemingly responsive, verbose yet hollow, and a total waste of time. It did what it did, and nothing got resolved. There was no escalation path to a human, despite my multiple requests and my pointing out that no human would respond the way it was responding. Nothing. Just the same “information” packaged and repackaged slightly differently a few times over. (And that led me to spend half of today training Perplexity on what I need it to do daily and for my various WIP projects. Bye-bye, ChatGPT and OpenAI! Go join Reddit in oblivion soon, please.)

That’s what’s being built into every layer of economic infrastructure right now. AI agents that look like helpers but behave like gatekeepers. Systems that can do more, but answer to no one. And once they're entrenched, due process doesn’t just become harder—it becomes impossible.

You can’t appeal a tax overpayment decision made by a closed system you don’t have access to. You can’t contest a failed payment reconciliation you never saw. You can’t negotiate with a marketing agent that already executed the buy. In all those situations, you will be confronted by just another AI chatbot that runs you around in circles, as OpenAI did to me over the past two days.

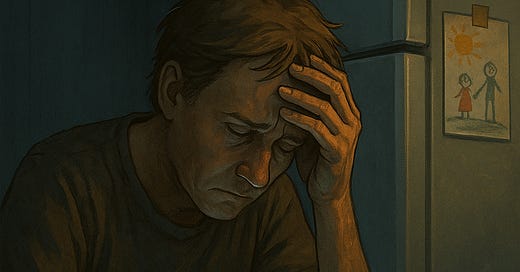

And as this becomes the norm, something more fundamental erodes: the sense that we are owners of our own financial lives. That we shape outcomes through participation. That our consent, even in the form of a click, matters.

Life becomes a rental model. You rent access to tools. You rent visibility into flows. You rent a seat at the table of your own affairs. But the systems run themselves. And you, increasingly, are just along for the ride . . .

If you found this article sufficiently melancholy and depressing, SUBSCRIBE to my channel for more analysis on AI, economics, politics, constitutional restoration, medicine, and the future of work. Also, please SHARE this piece far and wide with anyone thinking seriously (or even not at all) about these issues, and leave a COMMENT down below, especially on the concerns I set out in the paragraphs above.

Article five of The Long Tomorrow comes your way in 11-12 hours. Yippee!

Here's a YouTube video on how AI Agents grew from 2025Q1 to 2025Q2: https://www.youtube.com/watch?v=RexkNwfCkCA&ab_channel=TheAIDailyBrief%3AArtificialIntelligenceNews