What Does ChatGPT v4.1 Think Today About the Timing of AI Superintelligence Development?

A cut and paste of a brief conversation I had this morning about this topic with ChatGPT v4.1


So, I asked ChatGPT v4.1 this morning a few questions about AI superintelligence. Rather than waste a lot of time rehashing the brief and direct conversation, I’ve cut and pasted it right here for you to read. It’s not long, and it will likely spur you to think more about the timing of AI superintelligence. Tell me your thoughts on this topic, including whether you think ChatGPT v4.1 was slightly sandbagging me in its answers this morning. Enjoy!

You said:

Using the mindset and insight of a worldwide expert in the trenches of actual development of AI superintelligence every day, please give me your current best explanation of where the state of AI superintelligence is including your best prediction of when that will be achieved with whatever caveats you might think are necessary or prudent.

ChatGPT said:

Here’s a straight answer grounded in current facts and pragmatic assessment—no hype, no wishful thinking.

Where things actually stand as of mid-2025

Despite the nonstop noise about “AI superintelligence” and the big claims you see from labs and founders, there is no system today—commercial, open-source, or secret—that meets any reasonable definition of superintelligence. The most advanced large language models (GPT-4o, Claude 3 Opus, Gemini 1.5 Pro, etc.) are capable, increasingly multi-modal, and surprisingly general in some domains, but they are not autonomous, not fully reliable, not self-improving in any meaningful sense, and certainly not capable of the rapid, recursive, self-driven advancement that most experts would say defines superintelligence.

Benchmarks and boundaries

Autonomy: Current AIs can execute well-defined tasks and chains of reasoning within constraints, but they cannot independently formulate, pursue, and adapt complex, open-ended goals in the real world.

Reasoning and abstraction: These systems still make basic reasoning errors, hallucinate facts, and lack robust real-world grounding. Progress is visible, but most advances are “width not depth”—broader capabilities, not fundamentally deeper intelligence.

Agency: No existing model can genuinely act in the world with sustained strategic planning and adaptive learning. Any “agentic” demos are heavily scaffolded with human-coded guardrails and correction.

Self-improvement: There is no system that can redesign itself or its underlying algorithms autonomously in a significant way. “AutoGPT” style loops are a long way from recursive self-improvement or true optimization.

How far are we from actual superintelligence?

Here is where most in-the-trenches technical experts will give you the same basic answer: nobody knows for sure, and anyone making confident predictions is either selling something or deluding themselves. However, some broad outlines are visible:

Short term (1–3 years): Expect a relentless series of incremental improvements—models will get more capable, more multi-modal, better at reasoning, and more agent-like in limited domains. You’ll see more narrow automation, not a sudden jump to AGI or superintelligence.

Medium term (3–10 years): There could be architectural breakthroughs—hierarchical planning, memory integration, improved world models—but the obstacles are non-trivial. If there’s a “jump,” it’s more likely to be a qualitative shift in systems integration (AIs that coordinate with each other, or persistent long-term memory, or agentic planning), not an abrupt leap to superintelligence.

Long term (10–30 years): Superintelligence is possible within this horizon, but there are open technical problems—grounded reasoning, causality, agency, self-improvement, real-world actuation—that have resisted brute-force scaling. Hitting one of these walls could delay things by decades.

Caveats

Hardware is not the main constraint right now. The limiting factors are algorithmic (architecture, memory, agency, grounding), not just bigger clusters or more data.

Alignment and control are unsolved. Even if we build something approaching superintelligence, controlling it—or making it useful without catastrophic risk—is still an open research problem.

Reality check: No one has “cracked” consciousness, understanding, or general-purpose world modeling. Today’s AIs are not creative or original in the human sense; they remix, simulate, and optimize within the boundaries set by their training.

Best-guess timeline

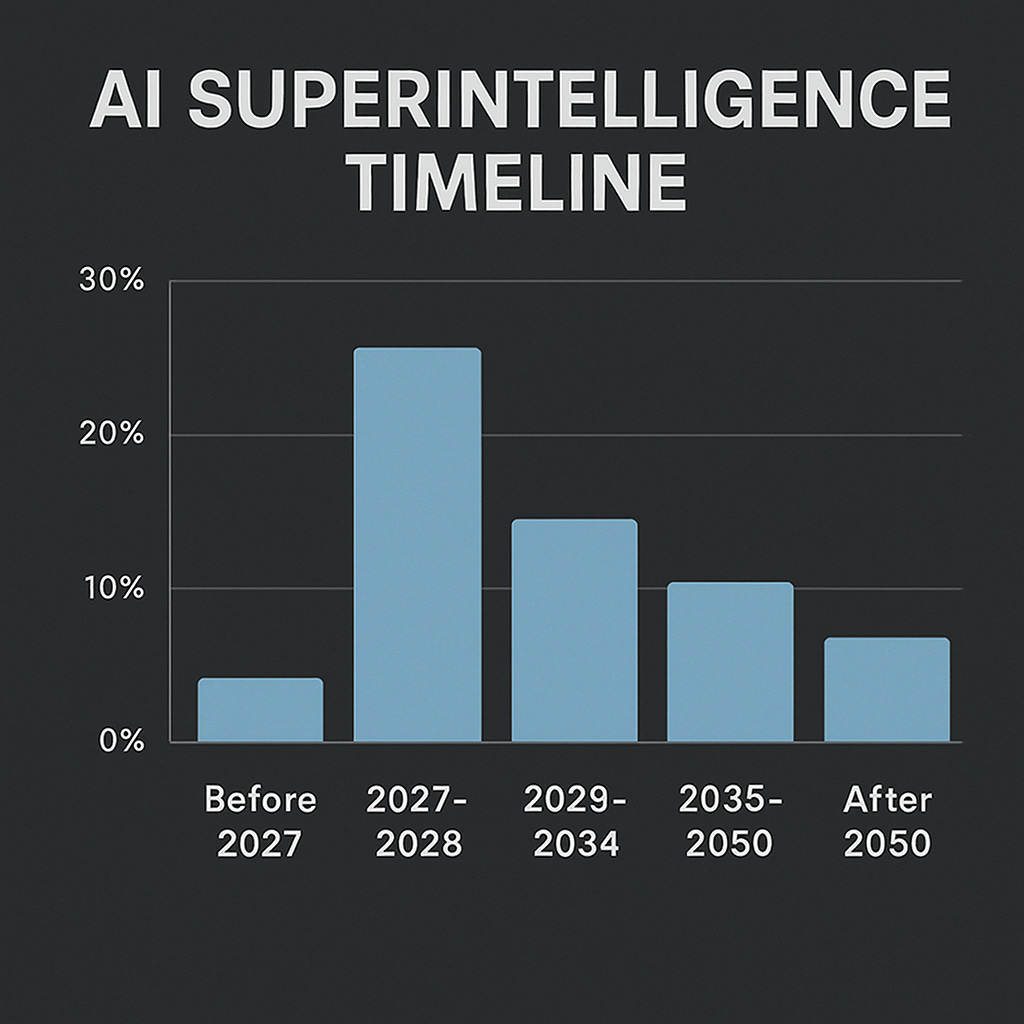

If you pressed the best technical minds in the field for a median estimate, you’d get something like:

AGI (AI that can do most economically relevant tasks as well as a human): Sometime in the 2030s, with plenty of risk it could slip to 2040+ or never occur.

Superintelligence (AI that far outstrips the best human in every domain, including science, engineering, and strategy): Possibly late 2030s to 2050, with a fat tail that could push it further out—or, with an unexpected breakthrough, perhaps sooner, but that’s a minority position among practitioners.

Bottom line

We’re not on the brink of superintelligence. The field is moving fast, but major technical roadblocks remain. Anyone who claims to have a clear line of sight to “superintelligence by 2027” is likely speaking from ideology or marketing, not grounded technical progress. The prudent view is that we’ll see a lot of noise and impressive demos over the next decade, but true superintelligence is still at least one or two major, unpredictable breakthroughs away.

If you want to hedge your bets, prepare for a world where AIs are increasingly competent assistants, domain-specific experts, and even organizational actors—but don’t expect a sudden, world-shattering leap to godlike intelligence in the next few years. The biggest risks right now are overreliance, misuse, and systemic instability—not runaway superintelligence.

If you want more detail on any technical, organizational, or geopolitical angle, just say so.

You said:

How does your response change in light of the content of this YouTube video:

and the data in this report: AI2027 Report

ChatGPT said:

Here’s how the Computerphile video and the AI 2027 report refine, rather than overturn, a pragmatic assessment of where superintelligence stands:

🎥 Video Insights (Computerphile)

The video features Daniel Kokotajlo, co-author of AI 2027, highlighting near-term possibilities: AI models evolving into internal systems that train other AI. That exactly matches their scenario—an AI-driven research feedback loop speeding progress. He emphasizes that the real uncertainty lies less in capability gains and more in alignment and oversight gaps, echoing my earlier point about persistent technical roadblocks.

📄 AI 2027 Report

This April 2025 scenario outlines a structured timeline:

Mid‑2025: Emergence of “stumbling agents”—helpful but brittle AI assistants handling simple computer tasks.

2027: Massive scale-up, with thousands of AI agents coding, researching, and self‑optimizing (they forecast ~1000× GPT‑4 compute and internal deployment of hundreds of thousands of AI researchers).

Late‑2027–28: Possible onset of broader superintelligence, through a fast “takeoff” from AGI coder assistants to AGI researcher agents.

They present dual scenarios: a “race” leading to misalignment and societal disruption, and a “slowdown” that lets safety catch up—but they caution the race track may outpace regulation.

Experts have mixed reactions:

Vox describes it as “plausible but precise enough to falsify,” warning of risky agent behavior if speed overtakes oversight.

Hacker News commenters note that major economic indicators, like GDP bumps, should start appearing if takeoff is near.

MIRI (Machine Intelligence Research Institute) thinks the report is somewhat optimistic on sanity and coordination among actors.

🔧 Implications for My Assessment

Tools for AI‑driven progress are already emerging

The report and video bolster the notion that AI is increasingly used to build better AI, supporting the “narrow automation, steady integration” outlook I described. That feedback loop is manifesting now and accelerating via scale-up.

Timeline risk shifts earlier, but still uncertain

AI 2027 strengthens the possibility of reaching AGI-level capability by 2027–28. My original "2030s median" could shift slightly earlier, but so much hinges on alignment and oversight. The timeline distribution tightens, but uncertainty remains high.

Alignment becomes the gating factor

Consistent with the report and technical expert fear: achieving recursive agentic speeds is plausible, but whether we can align or control it is far from assured. Alignment is still the unresolved central challenge.

Economic signals as diagnostics

If AI-led progress is causing sharp macroeconomic changes (e.g., hyper-growth in GDP or dramatic automation), that would empirically track toward takeoff. As noted, such signs would validate fast-path scenarios; otherwise, the cautious slow-path remains more probable.

✅ Updated Bottom Line

Capabilities: AI-as-researchers is no longer hypothetical—it's emerging and accelerating.

Timeline: AGI/superintelligence by late 2027 to 2028 becomes a credible near-term possibility, rather than just a long-shot in the 2030s.

But: The same caveats apply—architecture, alignment, and reliable agency remain unsolved. If labs press ahead under a geopolitical AI race, we may face misaligned, opaque systems before proper control mechanisms exist.

🧭 Pragmatic Outlook

Next 1–2 years: Watch for rapidly scaled deployment of AI-as-researchers and coder agents. Look for macroeconomic shifts (e.g. rapid productivity jumps).

By 2027–2028: We could enter a bifurcation: either a race to superintelligence with tight funding and little oversight—or a slowdown enabling alignment efforts to be meaningful.

Actionable advice: Treat both scenarios seriously. Monitor economic indicators, push for global coordination on alignment infrastructure, and prioritize guardrails before 2027.

Overall, the Computerphile video and AI 2027 report underscore that we're on the cusp of a fast-path scenario. The technical forecast shifts earlier—but the controlling or safe direction we take depends less on when AI becomes powerful, and more on whether we build robust alignment and governance in time.

If you found this article useful or insightful, SUBSCRIBE to my channel for more twice-daily long-form analysis on AI, economics, medicine, constitutional restoration, and the future of work. Also, please SHARE this piece far and wide with anyone thinking seriously about these issues, and leave a COMMENT down below, especially with your thoughts on the timing of the arrival of AI superintelligence.